An apology to subscribers: My intention is to write more frequently than I have over the last few weeks. The delay in publishing new content has been due to the complexity of this particular piece, compounded by travel and the arrival of a new baby. Thank you so much for your support and for reading.

I had to reframe and rewrite this article a few times to get the tone right. Media coverage of AI tends to generate interest through extreme reactions, and I didn’t want to be alarmist. Instead, I have tried to land on a measured tone and let the experts speak for themselves.

What Exactly Is Happening?

In case you haven’t heard, artificial intelligence is here, and it is advancing at a rate no one can pin down exactly but that is far faster than expected. In this essay, I want to first raise awareness of AI and then offer a Christian response to it.

If you have no idea what AI is in its most recent and popular chatbot form, this is where we begin. There are numerous recent interviews and presentations on the development of AI technologies, especially chatbots like ChatGPT and Google’s Bard. Session 2 of TED2023 was devoted to AI, and the notes are worth reading through. The opening talk by OpenAI co-founder Greg Brockman illustrates some of the things ChatGPT can do.

A very well-done 60 Minutes segment with Google showcased Bard and all it can do, and Google executives help explain what the program is and what it isn’t.

I really encourage you to watch those segments to get acquainted with it, but if you want a brief explanation, it goes something like this:

AI chatbots like Bard and ChatGPT are programs that have learned from vast amounts of digital language data drawn from the internet, and even from audio transcribed into text. Behind them are mathematical systems called ‘neural networks,’ which learn patterns from that language data and develop skills from them. These programs then use those patterns to solve problems and to interact with humans across an almost limitless range of tasks. You can ask one to write you a speech, make up recipes, or give you life advice. You can ask it to help with research or to do math.

At first blush, it simply sounds like a more complex version of Siri or Alexa. In fact, those technologies are AI, in that they listen to us, learn from us, and present us with what they think we want on our devices and applications. But this new form of AI goes a step further.

What is different about this technology is in the name: it is intelligent. It works on its own. It learns. It grows in capacity. AI can even make stronger AI by generating its own language data to learn from. Researchers have discovered, sometimes months after the fact, that their AI systems had acquired capabilities far beyond anything they expected. New languages, math and chemistry, practical world knowledge: all of it is being learned and improved upon at double exponential speeds. More on this in a minute.

The Warnings

The newest experimental technology, based on Large Language Models, has developed so quickly that in late March, prominent figures in tech signed an open letter calling on AI labs to pause, for at least six months, the training of any system more powerful than GPT-4. Why would they need to stop? In their words:

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system's potential effects.

This may sound like an overreaction, but what developers are seeing is that these newly advanced AI systems, which can learn, adapt, and grow based on their source data, are difficult, if not impossible, to control or predict. They are giant computers that run on their own, trained on data from the internet and beyond. That means human behavior is their model for what to be and how to act. In chat-based programs like ChatGPT, that can lead to serious consequences.

The situation is dire enough that Time magazine published an op-ed by Eliezer Yudkowsky arguing that pausing AI isn’t enough; he says it needs to be shut down. Yudkowsky is a leading figure in machine intelligence research and is not shy about how negative he thinks the outlook is. Even more recently, The New York Times published a piece about Geoffrey Hinton, the “Godfather of A.I.,” leaving Google so he can speak freely about the dangers of this technology. The very man who won the Turing Award, the most prestigious prize in computing, for his work on neural networks, the foundation of this AI, is now working to curtail the downstream effects of its implementation.

This interview with Elon Musk is helpful because he says very plainly what the dangers are without trying to sell AI. Many of the other players in this space have an incentive to paint AI in a positive light because their companies will profit from it. (Apparently Musk plans to start selling AI of his own soon as well.) Even so, within the first few minutes of his interview on ABC, Sam Altman, the CEO of OpenAI, which makes ChatGPT, confessed that he is a little afraid of his own technology. The interviewer was somewhat surprised, and when she pressed him on it, he said, “I think if I said I were not scared, you should either not trust me or be really unhappy that I am in this job.”

Uh, ok? Say more.

Paul Kingsnorth, an essayist and environmentalist who recently became a Christian, has laid out a fairly pessimistic account of what is going on with AI, but he is asking some really good questions about the impetus behind it. If we aren’t sure where this is going and we don’t know whether we can control it, why are we making it? Toward the end of his piece, he introduces a spiritual, even demonic, element that I’m not ready to cosign just yet, but that I find intriguing.

Why the negative outlook? Why are people sounding the alarm, and what exactly could make AI so dangerous? Through Kingsnorth’s post, I became aware of a video called The A.I. Dilemma, a presentation given by Tristan Harris, who was featured in the well-known documentary The Social Dilemma. Check it out:

Much of this talk is chilling in its implications. What we have now, with iPhones listening in on our conversations, harvesting data about what’s happening in our lives, and feeding that data to advertisers who strategically place ads in our social media feeds to get us to buy the thing we were just talking about with our spouse, is child’s play. It’s the Model T Ford of AI: the most primitive form of computer intelligence learning from human behavior and then responding.

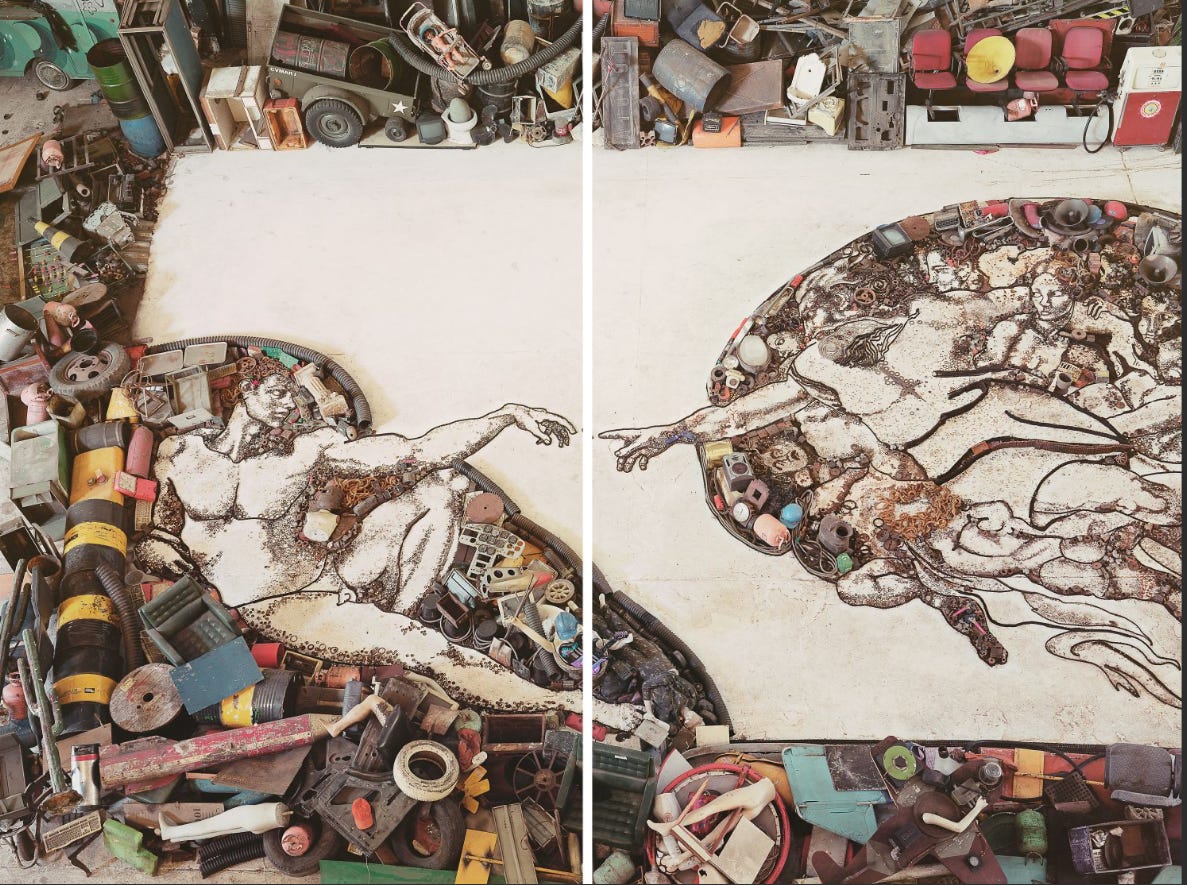

Harris makes clear that because of the vast amount of computing power and digital language data these machines have to work from, they will surpass humans in skills that typically set humans apart from animals and machines. He isn’t talking about doing calculus. He is talking about persuasion, deduction, creative writing, visual art, and more. AI as it stands right now can write a better work of fiction than the average American. It can certainly do better research than the average American, and it is on par with some master’s-level students in certain fields. It does all of this far faster than any human can, and the longer it runs, the greater its skill will become.

So, of course, it is only a matter of time before AI takes over entire slices of the labor force, and it will certainly affect every job and product in the world: in some ways for good, in some ways for worse, and in many ways we cannot yet know. This happened during the Industrial Revolution, it happened during the tech boom of the late 20th century, and it will happen again whenever a technology fundamentally changes the way humans produce goods and do work.

But it is not as simple as going from plows to factories. What if a computer could create hundreds of fake social media accounts claiming to be scientists and convincing people of the need for a specific measure to protect themselves against a spreading illness? What if similar accounts were weaponized by foreign countries? What if companies and politicians had free rein to use such power for their own purposes? Has that already happened?

It is clear that measures limiting the use of AI need to be put in place very quickly. Unfortunately, our regulatory agencies and governments are about a decade behind and show neither the agility nor the comprehension to deal with this issue.

This conversation on the BBC is an example of people in the industry trying to raise awareness of the need, as quickly as possible, for policy and for conversations with the right experts about how to handle the application of this new technology.

In summary, what we have are highly complex and advanced computer programs that are trained on data and are then able to learn and improve at problem solving. This problem solving has taken unforeseen forms, its potential is largely unknown, and the risks are tremendous without the right safeguards. Additionally, these programs are capable of exhibiting human-like behaviors.

AI and Being Human

When interviewers find out about emergent skills and AI’s ability to do human tasks better than humans, they almost without fail ask the question we are all wondering: Is it conscious? Is it sentient? Does it have intentions beyond our control? Speculative banter centers on intelligence, sentience, consciousness, and whether AI is a danger. As I was writing this, Plough magazine sent out this transcript of episode 55 of their podcast, where they discuss these very things.

Among these tech industry leaders, I’ve only ever seen one say a clear ‘no.’ The rest flirt with the concept of AI consciousness and use terms like sentient, conscious, and moral casually and without clear definitions. We have to remember that those on the leading edge of developing this technology are trained in their sciences; they are not philosophers or theologians. They rarely step back to ask moral questions from the same starting point as Christians, and they rarely question their presuppositions about what is real and what it means to be human. Much of our Western world is functionally metaphysically materialist. That is, we act as if all that exists is what we can sense and observe. Tech leaders like Elon Musk describe themselves as materialist atheists or agnostics. Buddhism, with a Hollywood lift, is having its moment among liberal West Coasters, who love its emphasis on the individual finding ultimate meaning and direction from within. Even in a pop pseudo-spirituality like this, the world is all there is, and anything spiritual resides inside you and me, not ‘out there.’

All this is to say that a Christian appraisal of AI starts from a fundamentally different place than a naturalist or materialist account does. Our view of what is real differs from that of someone who doesn’t believe there is a supernatural realm or mode of existence outside our own.

So before we ask what AI is, we have to ask what a human is. The Christian account of human origins and destiny is that we come from God and will return to God. It says that we are made in God’s image, which means that we are one part material and one part immaterial: we have souls. This conviction has been widely held across human cultures and history, but in the Christian faith it is rooted in the free and loving creative act of the one Triune God. We are moral and personal beings, which means we do not simply operate like animals on instinct and self-preservation; we operate on reason, pursuing relationships and seeking to do what we believe will lead to the ultimate good.

In a purely materialist or naturalist worldview, nothing is anything more than its physical matter. Within this paradigm, there is no reason a supercomputer running advanced AI could not achieve the kind of interaction with the world that we associate with human intelligence and awareness, and even surpass it. The AI you are interacting with will seem to have a relational and moral character. But this is not what we mean by conscious beings. A computer will be able to act as if it is conscious, based on programming and imitation, but it will not have an immaterial soul. A computer is made of natural materials and will always be purely material, no matter how complicated a simulation of human activity it can perform.

But this is not obvious to everyone. Even the reporting has taken on a mystical tone. Above I mentioned the NYT article on Geoffrey Hinton leaving Google to speak out on A.I. The article’s subtitle says that he “nurtured the technology at the heart of A.I.” You’d think he had raised a hen from a chick, or rescued a lame dog and nursed it back to health. It’s code. The man wrote code. Yet metaphysical, life-adjacent language is already being applied to the mathematical formulas and GPUs that make up the AI’s engine.

A friend once asked me, in all seriousness: if humans can procreate more humans with souls, why is it so far-fetched that we could create machines with souls? To answer, we have to reckon with what happens in the conception and birth of a new human. In conception and gestation, a human is created and formed from the physical matter of two parents, but it is not obvious that the soul comes from that process. It would seem, from the language of Scripture, that God creates the soul for each person at conception. Jesus is our clearest model for this: the person Jesus was fully God and fully man from conception, and his full humanity includes the immaterial soul that is part of every human’s existence.

So, unless God decides to do something similar for machines powered by computer code and mathematical networks, there is a fundamental metaphysical gap between us and everything else in creation. That gap is the presence of a soul and the ability to relate to God personally.

Using AI and Its Dangers

With all the warnings of impending doom and a worldwide takeover by weaponized AI, there still remains a very simple antidote: you get to determine how much you interact with smart technologies.

To some extent, you and I are wrapped up in a world and society that has been, and will increasingly be, technologized. I have yet to find an interview or talk where anyone says, “Stop using smartphones and computers and you’ll be good.” The assumption now is that to live a life of any meaningful productivity in our world, you have to be connected, despite the longing for disconnection our world so clearly feels. But does it have to be this way?

An uncritical acceptance of any technological innovation can have unintended and unforeseen consequences. It is well documented that we have totally botched mobile connectedness, social media, and our first wave of contact with A.I. The large-scale social experiment of smartphones has been an abject failure: loneliness, anxiety, attention disorders, the spread of false news, overreaction to partial news, entrenched partisan politics and social division, and on and on. Throw COVID on top as the final layer, and it is obvious to anyone with a pulse that our relationships and social atmosphere in America, if not the whole world, are in an unprecedented state, suffering under the pressure of too-fast movement across too large a scale. Ultimately, we have been forced out of humane, personal connection into mediated socialization across an abstract, unimaginably large technological sector. All of this is done with no grounds for knowing what is real, what is accurate, what is meaningful, or even why we should be using the technology in the first place.

But actually, we do know why. It is because there are companies that make billions monetizing our attention, and they have perfected the cultivation of attention addiction. One would think that with such powerful technologies we would need to work less, not more. One would think we could have more leisure, with the freedom to connect, create, and live. Yet we increasingly spend our leisure on addictive entertainment (if we can call it that when it makes us so angry so often), and it is wrecking us.

Christians have an answer that is categorically different. Set your minds on things above, not on things that are on the earth (Colossians 3:2). Do not be conformed to the pattern of this world, but be transformed by the renewal of your mind (Romans 12:2). What you give your attention to is what you will be like. What you behold you become. What you look at you will love. The simple act of critically evaluating the worth of something that demands your attention, before engaging with it, is the gate that may help keep you from diving off the deep end of doom-scrolling.

When AI begins to make its way into every form of technology, transforming how we use various tools and how various industries are run, we have the opportunity to ask: Is this wise? Does this promote whole human flourishing in community? Does this help us to create and live as complex and vibrant human beings? The worst thing we could do at the advent of this new technology is adopt it thoughtlessly, without stopping to evaluate whether we should.
